Introduction

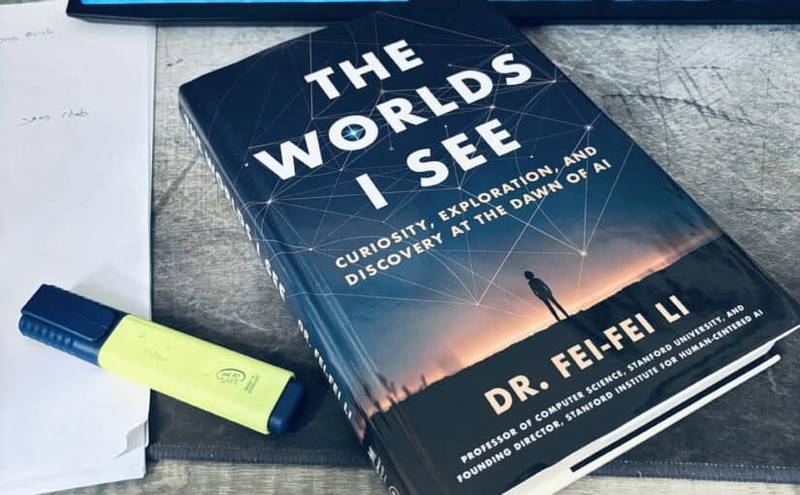

Fei-Fei Li’s The Worlds I See is more than the memoir of a pioneering AI researcher. It is a story of curiosity, perseverance, and the intersection of science, technology, and humanity. From her early inspirations drawn from physics legends like Einstein and Feynman to her groundbreaking work in computer vision and the creation of ImageNet, Li takes readers on an engaging journey through the evolution of artificial intelligence.

The book blends personal anecdotes with scientific discoveries, weaving together the historical development of neural networks, the philosophical implications of perception, and the ethical questions surrounding AI’s future. Li’s reflections on vision, as both a biological phenomenon and a computational challenge, underscore a profound realization: “To see is to understand.”

With thought-provoking insights on categorization and multimodal intelligence, The Worlds I See is not just a chronicle of technological progress but a testament to the spirit of exploration. Li leaves us with a powerful message—“Science, much like life, is a never-ending journey, always with new frontiers to chase.”

If you’d like to purchase the book on Amazon, please follow the links below:

1) Paperback

2) Hardback

Here are five key ideas from the book, explored through the broader lens of AI and machine learning.

Power of Data

Li’s main idea: “A model that can recognize everything needs data that includes everything.”

In The Worlds I See, Li’s vision for ImageNet stemmed from a fundamental insight: AI models are only as good as the data they learn from. The success of deep learning, particularly in computer vision, has been fueled by large-scale datasets. ImageNet played a transformative role in this, just as datasets like Common Crawl have done for NLP models such as GPT.

The rise of foundation models like GPT-4 and DALL·E echoes this principle. These models, trained on diverse and massive datasets, illustrate how AI systems improve by generalizing from a wide range of inputs. But as Li highlights, this dependence on data also raises key challenges—bias in training datasets can lead to biased AI, an issue the field is still grappling with today.

Vision is Not Just Seeing—It’s Understanding

A recurring theme in Li’s book is that vision is more than just the act of perceiving—it’s deeply tied to cognition. She connects this to neuroscience, referencing how the brain interprets sensory input to form meaning. This is a key distinction in AI: recognizing pixels is not the same as understanding an image.

Multimodal AI models like OpenAI’s CLIP (Contrastive Language-Image Pre-training) and Google DeepMind’s Flamingo aim to bridge the gap between vision and language by learning joint representations. They move beyond pixel classification to contextual understanding, much like how humans don’t just “see” but interpret and reason about the world.
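To make "learning joint representations" a little more concrete, here is a minimal sketch of a CLIP-style contrastive objective on toy data. It is not OpenAI's implementation: the two-dimensional embeddings are made up for illustration, the function names are hypothetical, and the temperature of 0.07 is an assumed value. The idea is that each image embedding should be most similar to its own caption's embedding and less similar to every other caption in the batch.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_entropy(logits, target):
    """Negative log-softmax of the target entry (numerically stable)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: pair k of images and texts is the positive."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # sim[i][j] = scaled cosine similarity between image i and text j
    sim = [[dot(i, t) / temperature for t in txts] for i in imgs]
    # Image-to-text: each row should peak on the diagonal.
    loss_i2t = sum(cross_entropy(sim[k], k) for k in range(n)) / n
    # Text-to-image: each column should peak on the diagonal.
    loss_t2i = sum(
        cross_entropy([sim[r][k] for r in range(n)], k) for k in range(n)
    ) / n
    return (loss_i2t + loss_t2i) / 2

# Toy embeddings (assumed values, not produced by a real encoder).
image_embs = [[1.0, 0.1], [0.1, 1.0]]
matched_text = [[0.9, 0.2], [0.2, 0.9]]   # caption k describes image k
swapped_text = [[0.2, 0.9], [0.9, 0.2]]   # captions paired with the wrong image

aligned = contrastive_loss(image_embs, matched_text)
misaligned = contrastive_loss(image_embs, swapped_text)
print(f"aligned loss: {aligned:.4f}, misaligned loss: {misaligned:.4f}")
```

The loss is low when matching pairs sit close together in the shared space and high when they do not, which is the pressure that pulls images and their descriptions toward a common representation.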

Role of Categorization in Intelligence

One of Li’s key research questions was: How many categories should an AI learn to recognize? To answer this, she looked at language, specifically at how many unique words exist for describing objects. This cross-domain thinking led to ImageNet’s structure, which was inspired by WordNet, a lexical database that organizes English words into a hierarchy of concepts.

This idea is fundamental to modern AI applications like zero-shot learning, where models recognize novel objects by mapping them to known concepts. It also plays a role in self-supervised learning, where models learn representations without explicit labels, mimicking how humans generalize knowledge across categories.
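As a rough illustration of "mapping novel objects to known concepts", the sketch below uses a simple attribute-based form of zero-shot classification. Everything in it is an assumption made for the example: the three attributes, the class descriptions, and the idea that some upstream detector has already scored the attributes for an image. The point is only that "zebra" can be recognized without any zebra training images, because it is described in terms of concepts the system already knows.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical attribute order: [striped, hooved, winged].
# "zebra" has no training images here; it exists only as a description.
CLASS_ATTRIBUTES = {
    "horse": [0.0, 1.0, 0.0],
    "tiger": [1.0, 0.0, 0.0],
    "eagle": [0.0, 0.0, 1.0],
    "zebra": [1.0, 1.0, 0.0],
}

def zero_shot_classify(predicted_attributes):
    """Return the class whose description best matches the predicted attributes."""
    return max(
        CLASS_ATTRIBUTES,
        key=lambda name: cosine(CLASS_ATTRIBUTES[name], predicted_attributes),
    )

# Assumed output of an attribute detector for one image: striped and hooved.
label = zero_shot_classify([0.9, 0.8, 0.05])
print(label)
```

Systems like CLIP replace the hand-written attribute table with text embeddings of class names, but the matching step follows the same nearest-concept logic.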

“North Star” of AI: Learning from Few Examples

Li highlights the idea of one-shot learning: the ability of AI to learn a new concept from a single example, or at most a handful, just as humans can recognize a new object after seeing it once. This contrasts with traditional deep learning models, which require thousands or millions of labeled examples to generalize well.

Meta-learning (learning to learn) and few-shot techniques aim to make AI more sample-efficient. Large language models such as OpenAI’s GPT-3 and Google’s PaLM (Pathways Language Model) show a related ability: given only a few examples in the prompt, they can pick up a new task without any retraining. The idea is to develop models that can generalize better from limited data, mirroring how a child doesn’t need to see a thousand different chairs to understand what a chair is.
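One simple way to picture few-shot classification is a nearest-prototype classifier: average the few labeled examples of each class into a single "prototype" and assign a new input to the closest one. This is a sketch of that general idea, not how GPT or PaLM work internally. The two-dimensional feature vectors are invented for the example and stand in for embeddings that a pretrained model would normally provide.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def fit_prototypes(support_set):
    """Build one prototype per class from a handful of labeled examples."""
    return {label: mean_vector(examples) for label, examples in support_set.items()}

def predict(prototypes, query):
    """Assign the query to the class with the nearest prototype."""
    return min(prototypes, key=lambda label: euclidean(prototypes[label], query))

# Two examples per class (assumed feature vectors, not real image embeddings).
support_set = {
    "chair": [[0.9, 0.1], [0.8, 0.2]],
    "table": [[0.1, 0.9], [0.2, 0.8]],
}

prototypes = fit_prototypes(support_set)
prediction = predict(prototypes, [0.7, 0.3])
print(prediction)
```

With good features, two examples per class can be enough. Most of the work happens in whatever produced those features, which is why few-shot methods lean so heavily on large-scale pretraining.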

Ethical and Humanistic Imperative of AI

In The Worlds I See, Li argues that AI must be developed with a focus on human benefit. She reflects on how motivations in AI research—whether academic curiosity, commercial profit, or societal progress—shape the trajectory of the field. The book warns against viewing AI as an entity that emerges on its own, rather than a tool shaped by human values.

This is at the heart of debates around AI safety and alignment. As AI systems become more powerful, questions of accountability, fairness, and governance become critical. OpenAI’s discussion on superalignment and the EU’s AI Act reflect ongoing efforts to ensure AI serves humanity rather than exacerbating inequalities.

Conclusion: Final Thoughts

One of the most profound takeaways from The Worlds I See is that the work of a scientist is never truly finished—there is always another frontier to explore. This mirrors the state of AI today: while we have made incredible strides, we are still far from achieving true Artificial General Intelligence (AGI).

Li’s journey—from an immigrant discovering her passion for science to shaping the future of AI—serves as both a historical account and a roadmap for where AI might go next. And just like her metaphor of the North Star, AI research is not about reaching a final destination but about constantly pushing the boundaries of what’s possible.

What do you think is the next North Star in AI?

Htet Naing’s Blog | Home Page | A New Haven for Curious Explorers

Author: